Q: What are the most important hardware factors for running LLMs locally?

A:

Your GPU's VRAM and AI computing capabilities are the most important factors for local AI performance, followed by system RAM and a modern CPU. The amount of VRAM determines the largest model you can run efficiently on your PC.

Q: Which GPUs are best for running complex local AI models (like LLaMA, GPT, Mistral, etc.) and handling advanced AI workloads?

A:

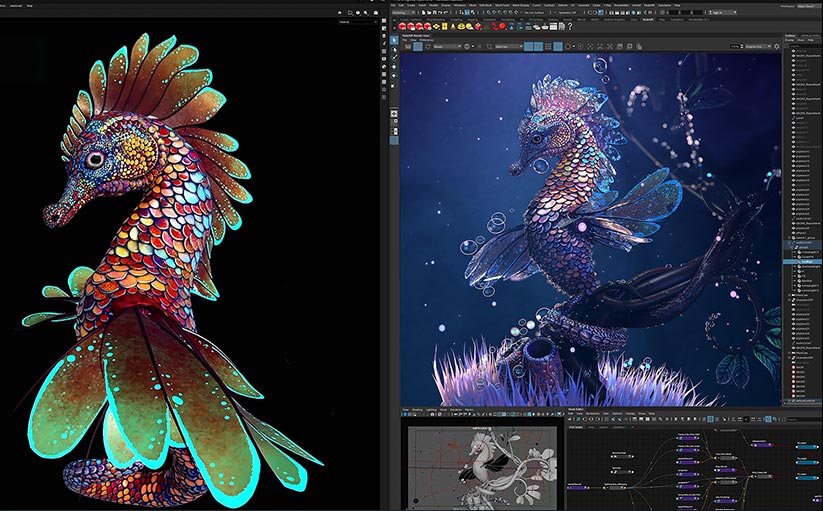

The GeForce RTX 5090 with 32GB of VRAM is one of the best GPUs on shelves today for running AI models and handling other advanced AI workloads, thanks to its next-gen Tensor Cores. If you're looking for the best value option, opt for a GeForce RTX 5070 Ti instead. [More detailed overview here]

Q: How do Tensor Cores improve AI performance on GeForce RTX GPUs?

A:

Tensor Cores accelerate AI workloads by performing matrix multiplications in mixed-precision arithmetic (such as FP16 or FP8), which boosts performance without sacrificing too much accuracy. These specialized hardware units execute such operations up to 30x faster than traditional GPU cores.
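
To make the accuracy trade-off concrete, here is a minimal sketch in plain Python that rounds inputs to FP16 and accumulates the products at higher precision. It only emulates the numerics on the CPU and does not use Tensor Cores; the helper names `to_fp16` and `dot_mixed` and the sample vectors are illustrative assumptions.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def dot_mixed(a, b):
    """Dot product with FP16-rounded inputs and a higher-precision accumulator."""
    acc = 0.0  # Python floats are FP64; this stands in for a wider accumulator
    for x, y in zip(a, b):
        acc += to_fp16(x) * to_fp16(y)
    return acc

# Illustrative inputs (assumed values, not taken from any real model).
a = [0.1 * i for i in range(1, 65)]
b = [0.01 * i for i in range(1, 65)]

exact = sum(x * y for x, y in zip(a, b))
mixed = dot_mixed(a, b)
rel_err = abs(mixed - exact) / exact
print(f"exact={exact:.4f} mixed={mixed:.4f} relative error={rel_err:.2e}")
```

The inputs lose precision when rounded to 16 bits, but keeping the running sum in a wider format limits how much that error grows, which is the basic idea behind mixed precision.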

Q: How much VRAM is required for different sizes of AI models?

A:

Requirements vary with the precision used and from model to model, but 7B models typically need 8GB+ of VRAM, 13B models need 16GB+, and 30B models require 24–30GB. Larger models may need even more. [More details here]
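
As a rough sketch of why precision matters so much, weight memory is approximately the parameter count multiplied by the bytes per parameter, plus some headroom. The function name `estimate_vram_gb` and the flat 20% overhead below are assumptions for illustration, not measured values.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

def estimate_vram_gb(params_billions: float, precision: str = "fp16",
                     overhead: float = 0.2) -> float:
    """Rough VRAM estimate in GB: weights plus a flat overhead allowance.

    `overhead` is an assumed fudge factor for the KV cache and activations;
    real usage depends on context length, batch size, and the runtime.
    """
    # 1e9 parameters times bytes per parameter, divided by 1e9 bytes per GB.
    weights_gb = params_billions * BYTES_PER_PARAM[precision]
    return weights_gb * (1.0 + overhead)

for size in (7, 13, 30):
    row = ", ".join(
        f"{p}: {estimate_vram_gb(size, p):.1f} GB" for p in ("fp16", "int8", "int4")
    )
    print(f"{size}B -> {row}")
```

Under these assumptions, the figures quoted above line up more closely with quantized weights (8-bit or lower) than with FP16, so treat them as a starting point and check the requirements of the specific model and runtime you plan to use.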

Q: Is NVIDIA or Radeon better for running AI workloads?

A:

NVIDIA is generally better for AI workloads thanks to its broad support, optimized libraries, richer software ecosystem, and much more powerful GPUs with large VRAM capacities.

Q: How stressful is running AI workloads or hosting local AI on my hardware, and will it damage components?

A:

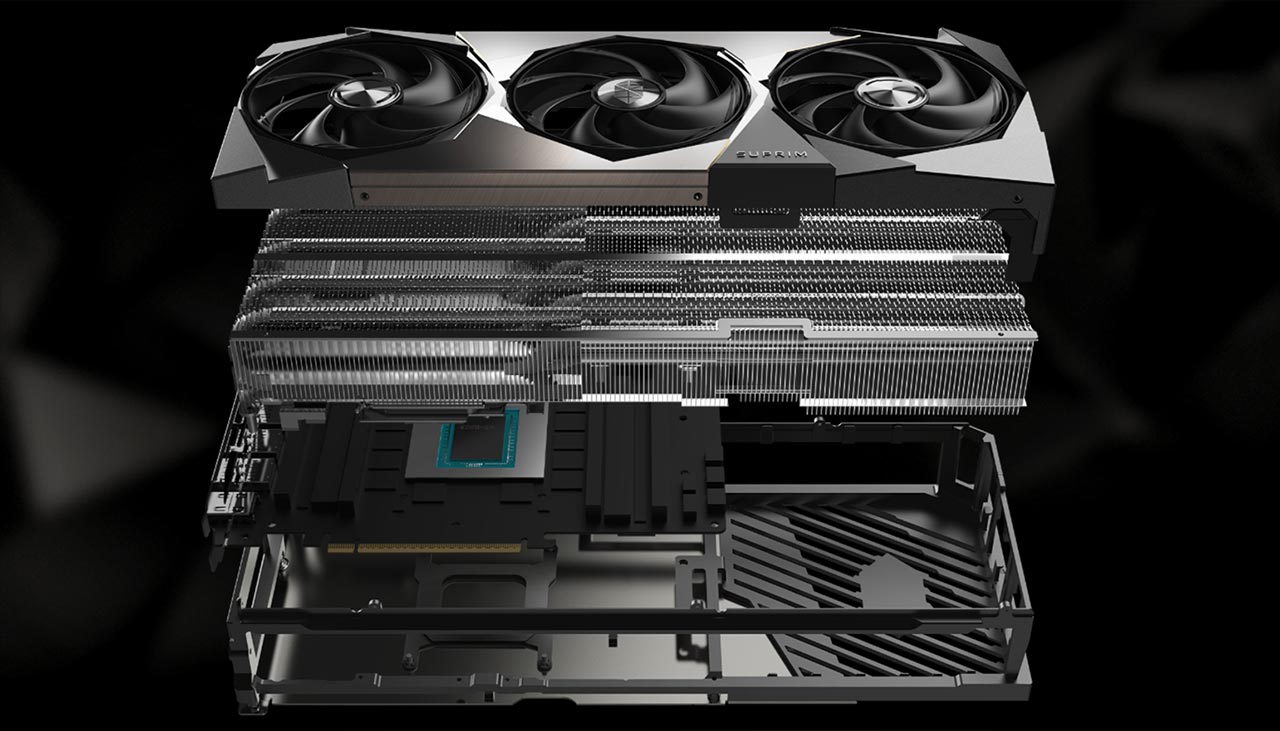

Running LLMs is similar in stress to gaming or rendering and won't damage hardware if cooling is adequate. Occasional heavy loads are perfectly safe for modern components as long as the cooling solution can handle heat effectively.

Q: What are the minimum PC specifications needed for running LLMs locally?

A:

At least 8–16GB VRAM, 32GB RAM, and a modern 6-core CPU are recommended. More RAM and VRAM will allow for larger, more complex models, while enabling better multitasking.

Q: Is it better to use multiple lower-end GPUs or a single high-end GPU?

A:

A single high-end GPU is simpler and offers much better compatibility with a broad range of applications. Multi-GPU setups can be more cost-effective, but make sure your workload's performance scales well across multiple GPUs before going this route.
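
One simple way to check scaling is to benchmark the same workload on one GPU and on several, then compare the speedup to the ideal. The sketch below assumes you have already measured throughput in tokens per second; the function name and the sample numbers are hypothetical.

```python
def scaling_efficiency(single_gpu_tps: float, multi_gpu_tps: float, n_gpus: int) -> float:
    """Fraction of ideal linear speedup achieved (1.0 means perfect scaling)."""
    speedup = multi_gpu_tps / single_gpu_tps
    return speedup / n_gpus

# Hypothetical measurements: 40 tokens/s on one GPU, 60 tokens/s on two.
efficiency = scaling_efficiency(40.0, 60.0, n_gpus=2)
print(f"scaling efficiency: {efficiency:.0%}")
```

An efficiency well below 100% means the extra GPUs are partly idle or waiting on communication, which weakens the cost argument for a multi-GPU build.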

Q: How important is the CPU compared to GPU for AI performance?

A:

The GPU matters far more for inference speed and for AI processing in games, creative apps, and similar software. The CPU only comes into play if you're running models larger than your VRAM or using CPU-specific features in some apps.
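
When a model does not fit in VRAM, some runtimes let you keep part of it on the GPU and run the rest on the CPU. The sketch below estimates that split under simplifying assumptions: all layers are the same size, and a fixed amount of VRAM is reserved for the KV cache and buffers. The function name and the `reserve_gb` default are illustrative, not taken from any specific tool.

```python
def split_layers(model_gb: float, n_layers: int, vram_gb: float,
                 reserve_gb: float = 1.5) -> tuple:
    """Return (gpu_layers, cpu_layers), assuming equally sized layers.

    `reserve_gb` is an assumed allowance for the KV cache and runtime buffers.
    """
    per_layer_gb = model_gb / n_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    # Keep as many whole layers on the GPU as fit in the usable VRAM.
    gpu_layers = min(n_layers, int(usable_gb // per_layer_gb))
    return gpu_layers, n_layers - gpu_layers

# Hypothetical case: a 20 GB model with 40 layers on a 12 GB card.
gpu_layers, cpu_layers = split_layers(20.0, 40, 12.0, reserve_gb=2.0)
print(f"GPU layers: {gpu_layers}, CPU layers: {cpu_layers}")
```

The more layers that land on the CPU, the more CPU speed and system RAM bandwidth affect overall inference speed.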

Q: What performance boost does DLSS provide on GeForce RTX GPUs?

A:

DLSS on GeForce RTX GPUs can boost gaming performance by 2–4x by upscaling lower-resolution images and generating brand-new frames using AI, delivering higher frame rates and better image quality.

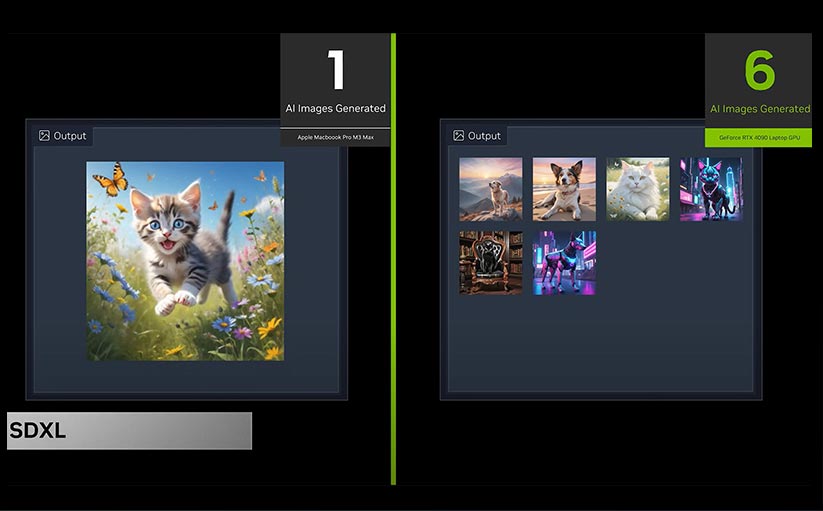

Q: How do GeForce RTX GPUs compare to Apple's M-series chips for local AI processing tasks?

A:

GeForce RTX GPUs lead in raw AI performance, scalability, and ecosystem support, making them the preferred option for advanced local AI tasks. Apple's M-series chips, while efficient and well-integrated for Mac workflows, are best suited for lighter or platform-specific AI applications.